Stream DB V3

Discover more about the Stream DB V3 component and how to use it on the Digibee Integration Platform.

Stream DB V3 allows the establishment of a connection with a service that supports the JDBC (Java Database Connectivity) protocol and the execution of the SQL (Structured Query Language) instructions. To see all the databases supported by this component, read the Supported databases documentation.

Differently from the DB V1 component, Stream DB has been designed to make execution in batches, which means, each return (resulting line or row) of the executed SQL instruction is individually treated through a subpipeline, being able to have its own processing flow. Read the Subpipelines article to learn more.

Parameters

Take a look at the configuration parameters of the component. Parameters supported by Double Braces expressions are marked with (DB).

General tab

Account Type

Defines the Account type to be used by the component. Options are Basic, AWS V4, and Kerberos.

N/A

String

Account

Account to be used by the component to connect.

N/A

String

Fail On Error

If the option is activated, the pipeline's execution with an error will be interrupted. Otherwise, the pipeline execution proceeds, but the result will show a false value for the "success" property.

False

Boolean

Operation tab

Database URL

URL (Uniform Resource Locator) to establish connection to the database server with support to the JDBC protocol.

jdbc:mysql://35.223.175.97/db-training

String

SQL Statement

SQL instruction to be executed.

select * from clientes LIMIT 2

String

Column Name

If an error occurs while processing the onProcess subpipeline, the value associated with the column defined in this field will be added to the error message in a new field called "processedId" that can be handled by the onException subpipeline.

codigo

String

Parallel Execution Of Each Iteration

When activated, this option causes each one of the passes through the pipeline to be made in parallel, reducing the total execution time. However, there's no guarantee that the items will be executed in the order returned by the database.

False

Boolean

Blob As File

If activated, this option causes the blob-type field to be stored in the pipeline context as files; otherwise, the fields are stored as normal texts (strings) and coded in base64.

False

Boolean

Clob As File

If activated, this option causes the clob-type field to be stored in the pipeline context as files; otherwise, the fields are stored as normal texts (strings).

False

Boolean

Charset

This option will only be shown in case the Clob As File option is activated. This parameter allows you to set the Clob file encoding.

UTF-8

String

Custom Connection Properties

Specific connection properties defined by the user.

N/A

String

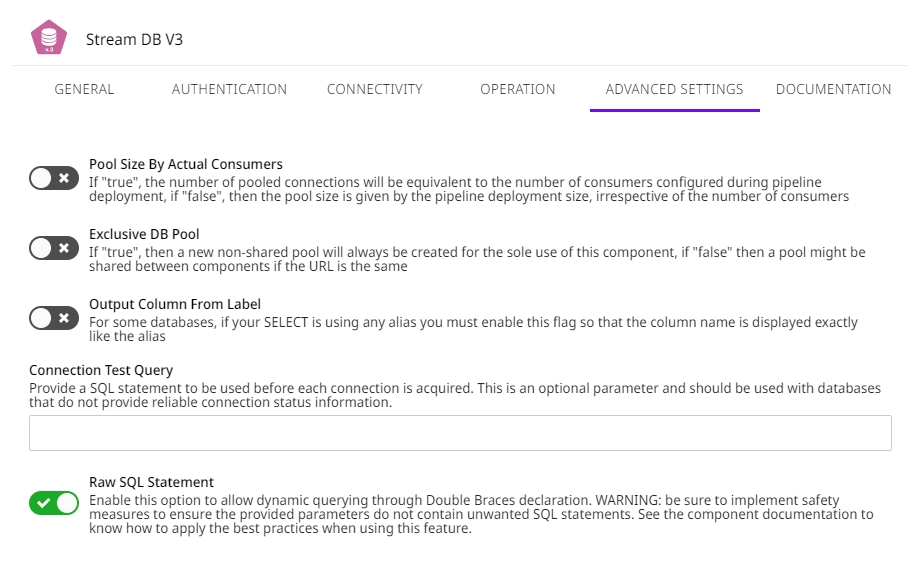

Advanced Settings tab

Pool Size By Actual Consumers

If the option is enabled, the number of pooled connections is equal to the number of consumers configured in the pipeline deployment. If the option is disabled, the pool size is determined by the pipeline deployment size, regardless of the number of consumers.

False

Boolean

Exclusive DB Pool

If the option is enabled, a new non-shared pool is always created for the sole use by this connectors. If the option is disabled, a pool might be shared between connectors if the URL, credentials, and custom connection properties are the same. Important: the custom connection properties must be defined in the exact same order between connectors for a pool to be shared, otherwise it will be considered as a different configuration and another pool will be created.

False

Boolean

Custom Pool

If the option is enabled, the base configuration for the connection pool will be set based on the Connection Maximum Lifetime, Minimum Idle Connections, and Idle Connection Timeout parameters. If the option is disabled, the pool will be configured based on the Keep Connection parameter. Important: this is an advanced feature and must be used carefully. See the dedicated section for the Connection Pool subject below.

False

Boolean

Connection Maximum Lifetime

Defines the maximum lifetime of a connection in the connection pool. An in-use connection will never be retired. It will be removed only when it is closed. The minimum value allowed is 30000 milliseconds (30 seconds). If a lower value is set, then the default of 1800000 milliseconds (30 minutes) will be used. This option is only available if the Custom Pool parameter is enabled.

N/A

Integer

Minimum Idle Connections

Defines the minimum number of idle connections to be maintained in the pool. The maximum value allowed is based on the deployment size, that is, 10, 20, or 40. If a higher value is set, the maximum for the current deployment size will be used. This option is only available if the Custom Pool parameter is enabled.

N/A

Integer

Idle Connection Timeout

Defines the maximum amount of time that a connection is allowed to sit idle in the pool. For this option to take effect: Its value must be lower than the one set in Connection Maximum Lifetime. The value configured in Minimum Idle Connections must be lower than the pool size (defined through Pool Size By Actual Consumers). This option is only available if the Custom Pool parameter is enabled.

N/A

Integer

Keep Connection

If the option is enabled, the connection pool will always maintain a minimum number of open connections ready to use. After 30 minutes, these connections will be renewed. The minimum number of open connections will be defined based on the Pool Size By Actual Consumers parameter. If the option is disabled, the pool will be created empty and connections will be opened on demand, being kept in the pool for no more than 5 minutes. In this case, the connections are not renewed. This option is only available if the Custom Pool parameter is disabled.

True

Boolean

Output Column From Label

For some databases, if your Select uses an alias, you must enable this flag so that the column name is displayed exactly like the alias.

False

Boolean

Connection Test Query

SQL statement to be used before each connection is established. This is an optional parameter and should be used with databases that do not provide reliable connection status information.

N/A

String

Raw SQL Statement (DB)

If the option is active, the SQL Statement parameter allows the use of dynamic queries through Double Braces statements. When using this functionality, you must ensure that the pipeline has security measures against unwanted SQL statements (SQL Injection). See more about this parameter in the section below.

False

Boolean

Documentation tab

Documentation

Section for documenting any necessary information about the connector configuration and business rules.

N/A

String

Parameters additional information

Column Name

See the following example about Column Name error message:

Blob As File

If Blob As File is active, the blob-type field is stored as follows:

Otherwise, the blob-type field is stored as follows:

Clob As File

If Clob As File is active, the clob-type field is stored as follows:

Otherwise, the clob-type field is stored as follows:

Raw SQL Statement

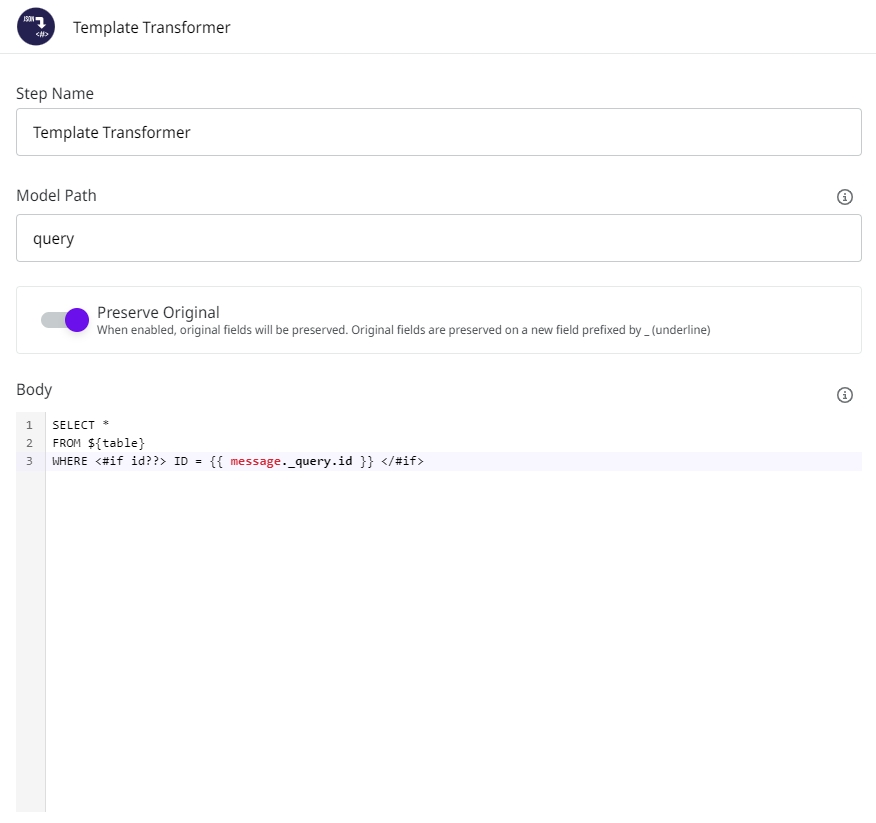

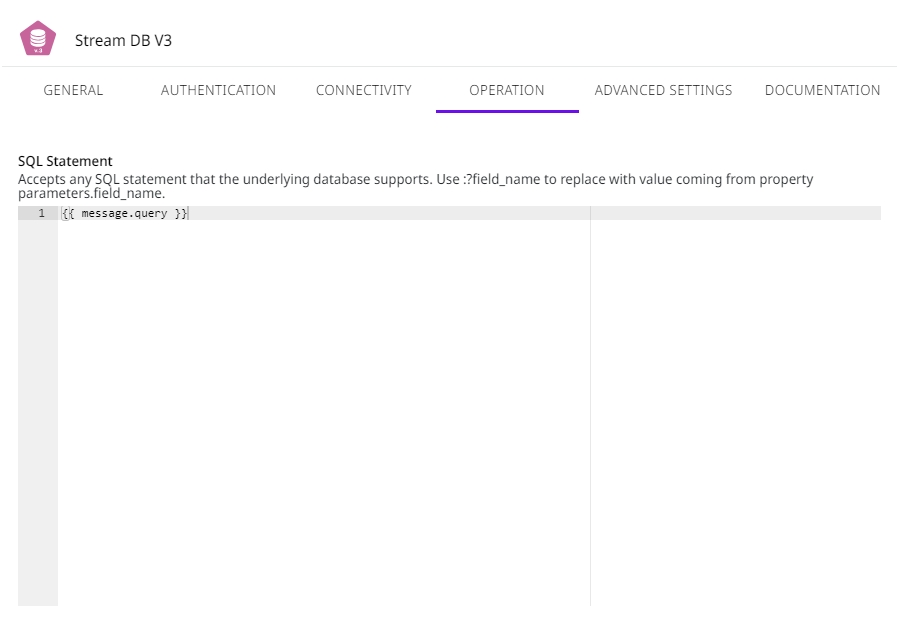

To bring more flexibility when using Stream DB V3, we can activate the Raw SQL Statement option, previously configure a query, and reference it through Double Braces in the SQL Statement parameter as follows:

Query previously defined via Template Transformer

Raw SQL Statement activation

Query referenced in SQL Statement parameter

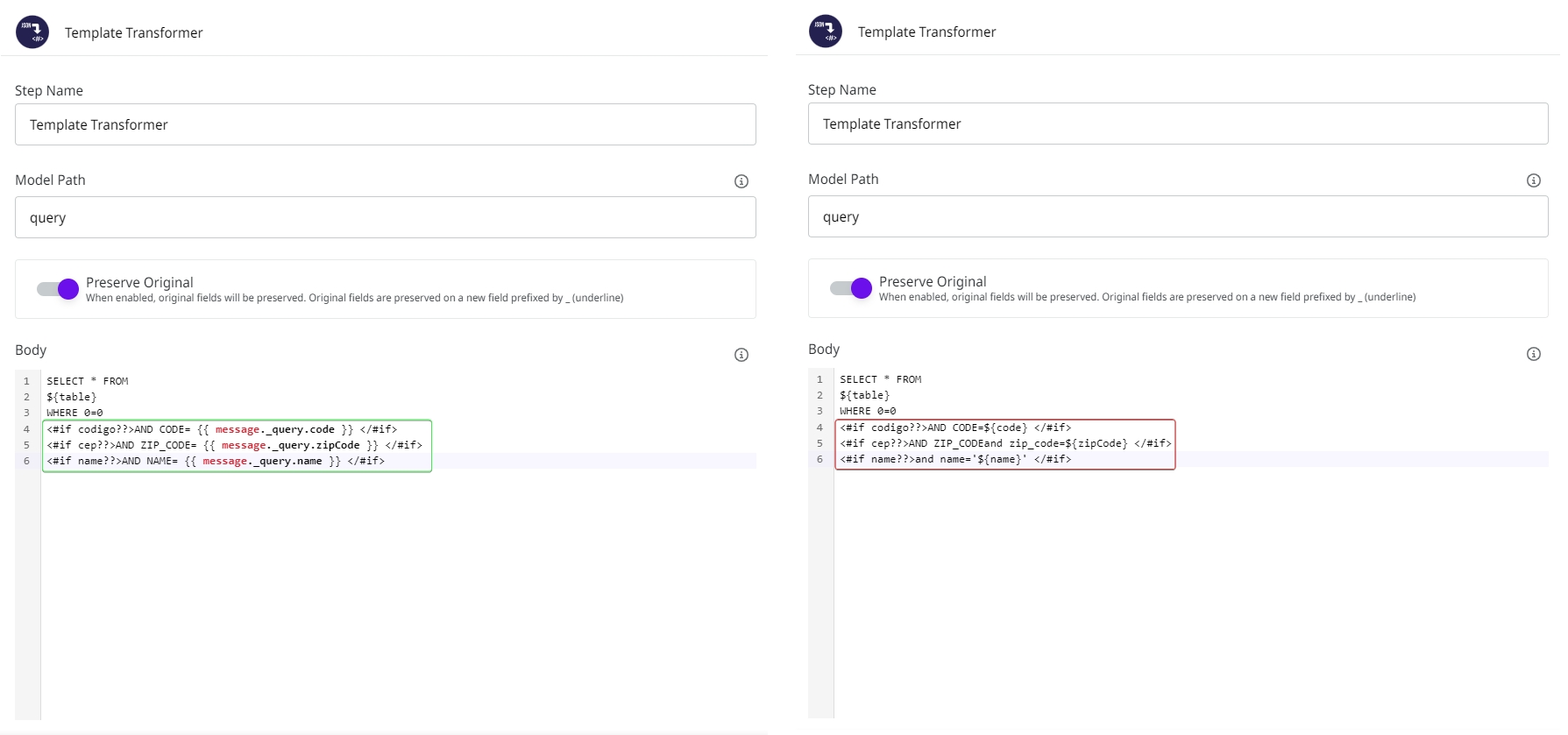

Important: as a good practice, we strongly recommend that, when activating the Raw SQL Statement option, the queries are previously defined using the Template Transformer component. The use of Template Transformer allows validating parameters through FreeMarker technology and also parameter declaration through Double Braces. These parameters are not resolved by Template Transformer but by the component Stream DB V3, which by default configures and validates the parameters of the SQL statement beforehand (PreparedStatement). By applying these security measures, you reduce the risks of attacks like SQL Injection.

In the image below, we have an example of recommended use of the component on the left (with the Double Braces in the WHERE clause, highlighted in green); and another example of non-recommended use on the right (with the FreeMarker in the WHERE clause, highlighted in red), which may pose risks to pipeline safety:

Technology

Authentication via Kerberos

To make an authentication to a database via Kerberos, it's necessary to:

inform a KERBEROS-type account;

set a main Kerberos principal;

set a keytab (that must be the base64 of the own generated keytab file).

Messages flow

Message structure available in the onProcess subpipeline

Once the SQL instruction is executed, the subpipeline will be triggered receiving the execution result through a message in the following structure:

Output with error

Output

After the component execution, a message is returned in the following structure:

total: total number of processed lines.

success: total number of successfully processed lines.

failed: total number of lines whose processing failed.

Stream DB V3 makes batch processing, which means processing the data continuously and in a controlled manner in smaller batches.

Connection pool

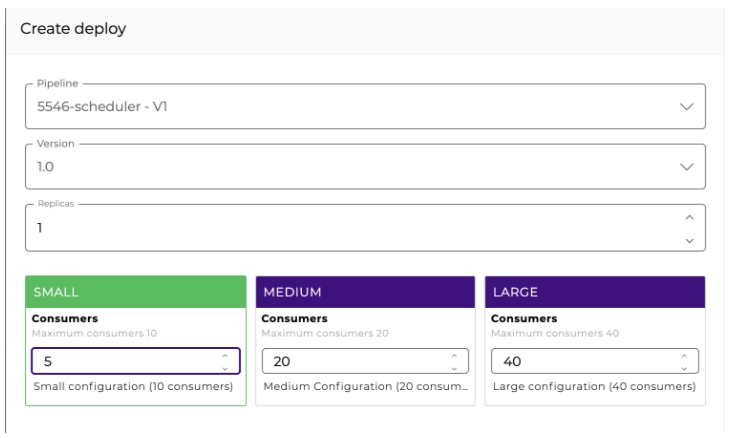

By standard, we use a pool based on the configurations of the deployed pipeline. If it's a Small pipeline, then the pool size will be 10; for the Medium it will be 20, and for the Large 40.

It's possible to manage the pool size during the implantation as well. For that, it will be necessary to enable the Pool Size By Actual Consumers parameter in the component. With it, it will be used whatever is manually configured in the implantation screen.

In the example of the image above, a SMALL pipeline with 5 consumers was configured. If you want the pool of the database components (DB V2 and Stream DB V3) to use this size, it's necessary to enable the Pool Size By Actual Consumers parameter in all the existing components.

Be extra careful when configuring the pool size manually so there's no deadlock in concurrent calls to the same database.

Our pool is shared between the database components that access the same database inside the pipeline. If you need an exclusive pool for a determined component, enable the Exclusive DB Pool parameter.

Customizing the Connection Pool

By default, the connector defines how the connections in the pool are handled based on the Keep Connection parameter. This configuration aims to facilitate the connection pool configuration for scenarios in which, for example, high availability is necessary or the opposite is the case.

If enabled, the connections in the pool are kept open all the time and renewed after 30 minutes. This could be used for high availability scenarios reducing the cost of frequently opening new connections.

However, if we have the opposite scenario, where connections are needed between longer intervals of time, we can disable the parameter to configure the pool without open connections and only open it when it is really necessary. After the connection has been used, it is kept in the pool for no more than 5 minutes and is not renewed.

Although this means you don’t have to worry about configuring the connection pool, this default option may not be the best for some use cases.

For more flexibility on the pool configuration, you can enable the Custom Pool option to ignore the default configuration and set a custom configuration.

In this case, you need to set the three parameters below (which are implicitly defined in the default configuration):

Connection Maximum Lifetime

Minimum Idle Connections

Idle Connection Timeout

Customizing a connection pool can be a difficult task as it requires a deeper knowledge of the subject. Besides, when applied to the context of the Digibee Integration Platform, you have to consider the variables that can affect the pool performance.

Things like the deployment size and its replicas, access to the same databases using distinct credentials and properties on DB connectors inside the same and different pipelines, the exclusive DB pool option on DB connectors, directly affect how the connection pool should be configured.

Based on all the mentioned above, it is highly recommended that you only enable the Custom Pool option if you have some knowledge on the topic and if it is really necessary.

Last updated

Was this helpful?