DB V2: Usage scenarios

Learn more about practical usage scenarios for the DB V2 connector, ranging from defining personalized connection properties to handling custom data types.

DB V2 is a connector that supports SELECT, INSERT, DELETE, and UPDATE operations, as well as procedure calls, returning results in a JSON structure. For the full list of supported databases, see the Supported databases documentation.

This document outlines various usage scenarios for the connector, ranging from defining personalized connection properties to handling custom data types.

Explore the supported usage scenarios below:

Configuring a QUERY operation with a SELECT statement

Use DB V2 to run a SELECT query when you need to retrieve data from a configured database.

To do this, connect DB V2 to the trigger and configure it as follows:

General tab:

Account Type: Select the type of account you configured.

Account: Choose the account used to authenticate with the database.

Operation tab:

Type: Select

QUERY.Database URL: Enter your database URL, such as

jdbc:mysql://myserver/mydb.SQL Statement: Enter the SQL Statement, for example

SELECT * FROM CLIENTS.

After executing the pipeline, the output will be:

{

"data": [

{

"uf": "SP",

"codigo": 1,

"cidade": "Osasco",

"logradouro": "Rua Laranjeiras",

"name": "João Bosco",

"due_date": "2019-03-27",

"email": "[email protected]",

"cep": "XXXXX-XXX"

},

{

"uf": "SP",

"codigo": 2,

"cidade": "São Paulo",

"logradouro": "Rua João Bosco",

"name": "Roberto Carlos",

"due_date": "2019-03-27",

"email": "[email protected]",

"cep": "XXXXX-XXX"

}

],

"updateCount": 0,

"rowCount": 2

}Understanding the output

The query returns a JSON object with three root properties:

data: An array of objects representing the rows by the query. Each object contains properties corresponding to the columns in theSELECTstatement. For example, if the query isSELECT name FROM CLIENTS, each object in the array will have a singlenameproperty.updateCount: The number of rows affected by the query. In this case, the value is0because aSELECToperation doesn’t modify the table.rowCount: The number of rows returned by the query.

Configuring the QUERY operation with an UPDATE statement

Use DB V2 to update records in your database by executing an UPDATE query.

To do this, connect DB V2 to the trigger and configure it as follows:

General tab:

Account Type: Select the type of account you configured.

Account: Choose the account used to authenticate with the database.

Operation tab:

Type: Select

QUERY.Database URL: Enter your database URL, such as

jdbc:mysql://myserver/mydb.SQL Statement: Enter the SQL Statement, for example

UPDATE CLIENTS SET uf='SP' WHERE uf is null.

After executing the pipeline, the output will be:

{

"data": null,

"updateCount": 5,

"rowCount": 0

}Understanding the output

updateCount: Confirms that five rows in the table were modified in this example.

Configuring the QUERY operation with a DELETE statement

Use DB V2 to remove data from your database with a DELETE query.

To do this, connect DB V2 to the trigger and configure it as follows:

General tab:

Account Type: Select the type of account you configured.

Account: Choose the account used to authenticate with the database.

Operation tab:

Type: Select

QUERY.Database URL: Enter your database URL, such as

jdbc:mysql://myserver/mydb.SQL Statement: Enter the SQL Statement, for example

DELETE FROM CLIENTS WHERE code='111111'.

After executing the pipeline, the output will be:

{

"data": null,

"updateCount": 1,

"rowCount": 0

}Understanding the output

updateCount: Confirms that one row in the table was deleted in this example.

Configuring the QUERY operation with an INSERT statement

Use DB V2 to insert new records into your database using an INSERT query.

To do this, connect DB V2 to the trigger and configure it as follows:

General tab:

Account Type: Select the type of account you configured.

Account: Choose the account used to authenticate with the database.

Operation tab:

Type: Select

QUERY.Database URL: Enter your database URL, such as

jdbc:mysql://myserver/mydb.SQL Statement: Enter the SQL Statement, for example

INSERT INTO CLIENTS (name, email) VALUES ('My Name', '[email protected]').

After executing the pipeline, the output will be:

{

"data": null,

"updateCount": 1,

"rowCount": 0

}Understanding the output

updateCount: Confirms that one row in the table was created in this example.

Keeping the connection active for older or unreliable drivers

If your database is incompatible with JDBC 4.0 or struggles to maintain a reliable connection, you can use DB V2 to execute any query — even if the same call is made again after 20 minutes. This ensures that the database connection remains active.

In the Advanced Settings tab, you can configure the Connection Test Query. This query runs before the one specified in the SQL Statement parameter, ensuring that the database connection is established and preventing errors.

If the connection test fails, a new connection will be opened automatically. This helps prevent issues such as "stuck" connections and timeouts caused by previous failed queries.

This option should only be considered for older databases that support JDBC versions earlier than 4.0.

To do this, connect DB V2 to the trigger and configure it as follows:

General tab:

Account Type: Select the type of account you configured.

Account: Choose the account used to authenticate with the database.

Operation tab:

Type: Select

QUERY.Database URL: Enter your database URL, such as

jdbc:mysql://myserver/mydb.SQL Statement: Enter the SQL Statement, for example

INSERT INTO CLIENTS (name, email) VALUES ('My Name', '[email protected]').

Advanced Settings tab:

Connection Test Query: Enter a query to test the connection, such as

SELECT version().

After executing the pipeline, the query result will be shown in the Output column of the Execution panel.

Keeping the connection active for 5 or 30 minutes

Use DB V2 to keep your database connection open for 5 or 30 minutes, depending on your needs.

Configure the connector as follows:

Advanced Settings tab:

Keep Connection: Enable this option to extend the default 5-minute connection time to 30 minutes, ensuring a longer active session with the database.

Interrupting pipeline execution in case of an error

Use DB V2 to control pipeline execution by interrupting it when an error occurs during a database operation.

To control how errors impact execution, configure the connector as follows:

General tab:

Fail On Error: Enable this option to stop the pipeline if an error occurs while invoking DB V2. If disabled, the pipeline will continue running even if the operation fails.

Accessing and writing files into the database

Use DB V2 to read from or write files into BLOB or CLOB fields in your database. This allows you to:

Write files from the pipeline into BLOB or CLOB columns in the database.

Read BLOB or CLOB data from the database as files within the pipeline.

By enabling the Blob As File or Clob As File option, you can specify a file path for these operations:

When using

INSERT, providing a file path from the pipeline stores the file in the database.When using

SELECT, the retrieved BLOB or CLOB content is converted into a file within the pipeline.

Step 1: Read the file

To read the file, connect DB V2 with the trigger and configure it as follows:

General tab:

Step Name: Name it as “Read File” or similar.

Account Type: Select the type of account you configured.

Account: Choose the account used to authenticate with the database.

Operation tab:

Type: Select

QUERY.Database URL: Enter your database URL, such as

jdbc:mysql://myserver/mydb.SQL Statement: Enter the SQL Statement, for example

SELECT blob FROM CLIENTES WHERE id=78.Blob As File / Clob As File: Enable one or both options. If Clob As File is enabled, the Charset parameter will appear for defining the file encoding.

After executing the pipeline, the output will be:

Two files, E4G17F.file and H2K89G.file, were generated and made available in the pipeline.

Step 2: Write the file

To write the file, connect another DB V2 with the “Read File” connector and configure it as follows:

General tab:

Step Name: Name it as “Write File” or similar.

Account Type: Select the type of account you configured.

Account: Choose the account used to authenticate with the database.

Operation tab:

Type: Select

QUERY.Database URL: Enter your database URL, such as

jdbc:mysql://myserver/mydb.SQL Statement: Enter the SQL Statement, for example

INSERT INTO CLIENTS (blob, clob) VALUES ( {{ message.data.blob }}, {{ message.data.clob }} ).

This example uses Double Braces expressions ({{ message.data.blob }}, {{ message.data.clob }}) to reference the query result from the previous connector. These expressions retrieve the generated file names (E4G17F.file, H2K89G.file) and pass their paths into the INSERT command for the blob and clob fields.

Blob As File / Clob As File: Enable one or both options. If Clob As File is enabled, the Charset parameter will appear for defining the file encoding.

Type Properties: Click Add and provide the following details:

Key:

0(each Double Braces expression has an index;0refers to the first one)Type:

BLOBOut Parameter Name: (leave empty)

Parameter Type:

IN

After executing the pipeline, the output will be:

Understanding the output

The result indicates that one row in the table was affected. First, the Read File connector retrieved the BLOB and CLOB content from the database and generated files. Then, the Write File connector used these files from the pipeline and inserted them into the database as a new record.

Executing a procedure

Use DB V2 to call and run a stored procedure in your database and retrieve its result.

Example

In this example, the database contains the following procedure:

To execute this procedure in your pipeline, connect DB V2 to the trigger and configure it as follows:

General tab:

Account Type: Select the type of account you configured.

Account: Choose the account used to authenticate with the database.

Operation tab:

Type: Select

PROCEDURE.Database URL: Enter your database URL, such as

jdbc:mysql://myserver/mydb.SQL Statement: Enter the SQL Statement, such as

call proc_sum_one({{ message.num }}).

In this example, we use the Double Braces expression {{ message.num }} to access a value from the previous connector. For more details, read the documentation.

Type Properties: Click Add and provide the following details:

Key:

0(each Double Braces expression has an index;0refers to the first one)Type:

INTEGEROut Parameter Name:

result(defines the name of the procedure’s output parameter)Parameter Type:

INOUT

Once the connector is configured and saved, open the Execution panel and provide an input value in the Payload column, such as:

After executing the pipeline, the output will be:

Defining personalized connection properties

Use DB V2 to define personalized connection properties, customizing how the connector interacts with your database.

To do this, connect DB V2 to the trigger and configure it as follows:

General tab:

Account Type: Select the type of account you configured.

Account: Choose the account used to authenticate with the database.

Operation tab:

Type: Select

QUERY.Database URL: Enter your database URL, such as

jdbc:mysql://myserver/mydb.SQL Statement: Enter the SQL Statement, such as

SELECT * FROM CLIENTS.Custom Connection Properties: Enter the custom connection properties. For example,

{ "connectTimeout": 1 }

After executing the pipeline, the output will be:

In this case, the connectTimeout connection property was set to 1 (one second) to demonstrate that it was applied and resulted in a connection error.

Reading custom data types from a Oracle database

Use DB V2 to read custom data types from an Oracle database when a configured table contains this type of data.

Example:

Suppose you have a table created with the following structure:

And the OWNER custom type is defined as:

When executing the following query:

The result will be:

If the custom type includes other custom types within its structure, the returned data will be nested, as shown in the example below:

Custom types don’t expose the field names defined in their structure; only the order of those fields is preserved.

In the example above, the first element of the "owner" array corresponds to the "name" field and the second element to the ”email” field, as defined in the OWNER type.

When working with custom types in PROCEDURE queries, you must set the Out Parameter Name to match the name of the custom type. In this case, that would be OWNER.

Sending custom data types from a Oracle database

Use DB V2 to insert custom data types (structs) into a table in an Oracle database.

The DB V2 connector doesn’t support Double Braces expression to resolve custom types (struct) when using INSERT or UPDATE commands.

Example

The following custom types and tables are created in the database:

Custom types:

Table:

To insert data into the EMPLOYEE table, you can use the following approaches:

Example input:

Using an

INSERTstatement from aSELECTquery:

Using a previously created

PROCEDURE:

Using a full SQL Script:

Using Raw SQL statement to handle conditional queries

Suppose your integration flow needs to execute one of two different SQL queries depending on business logic:

Query 1:

Query 2:

The DB V2 connector supports the Raw SQL Statement feature, allowing you to reference an entire query dynamically using Double Braces. This means you can define the full query in a previous connector and pass it as a variable into DB V2, adapting it at runtime.

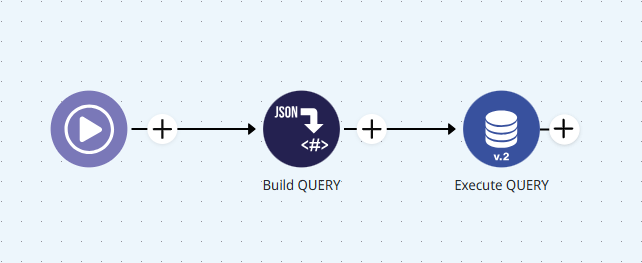

To use this feature, you must configure the SQL query in a connector like Template Transformer and then reference it in DB V2.

Step-by-step example

Step 1: Configure the pipeline

Add a Template Transformer and a DB V2 connector to your pipeline.

Step 2: Configure the Template Transformer connector

Preserve Original: Enable this option.

Body: Set the following body:

Step 3: Configure the DB V2 connector

General tab:

Account Type: Select the type of account you configured.

Account: Choose the account used to authenticate with the database.

Operation tab:

Type: Select

QUERY.Database URL: Enter your database URL, such as

jdbc:mysql://myserver/mydb.SQL Statement: Set the SQL statement to:

{{ message.query }}

Advanced Settings tab:

Raw SQL Statement: Enable this option.

Step 4: Test the execution

On the Execution panel, test the following input payloads:

Payload for Query 1:

Payload for Query 2:

The expected results for each query are:

Result for Query 1:

Result for Query 2:

Understanding the output

The query result is returned as a JSON with three main properties:

data: An array of objects representing each row returned. Each object contains the columns selected by the query.Example: If your query is

SELECT name FROM TABLE_1 WHERE ID = 123, each object in thedataarray will contain only thenameproperty.

updateCount: The number of rows affected by the SQL command. ForSELECToperations, this is always0, as no table is affected.rowCount: The number of rows returned by the query.

Although other connectors like JSON Generator or Transformer (JOLT) can also be used to define the query string, Template Transformer offers some important advantages:

Uses FreeMarker technology to validate data before executing the target query.

Helps prevent SQL Injection: the Double Braces declaration is not resolved by Template Transformer itself, but by DB V2, which configures and validades the SQL statement parameters in advance using Prepared Statements.

Provides a safer and easier way to create queries in your pipeline.

Last updated

Was this helpful?