Pipeline Engine

Learn how the Pipeline Engine interprets and runs your integration pipelines.

The Pipeline Engine of the Digibee Integration Platform is responsible for interpreting and executing pipelines created through the interface.

Take a look at the concepts to better understand how the Pipeline Engine works:

Pipeline Engine: Responsible for the execution of the flows built on the Digibee Integration Platform.

Trigger: Receives invocations from different technologies and forwards them to the Pipeline Engine.

Queues mechanism: Central mechanism for managing queues on the Digibee Integration Platform.

Operation architecture

Each flow (pipeline) is converted into a Docker container and run on Kubernetes, the technology on which the Digibee Integration Platform is built. This operation model provides guarantees such as:

Isolation: Each container runs on the infrastructure individually. Memory usage and CPU consumption vary for each pipeline.

Safety: Pipelines don’t interfere with each other, unless this interaction is intentionally configured through the interfaces provided by the Digibee Integration Platform.

Specific scalability: You can adjust the number of pipeline replicas based on performance needs — scaling up for higher demand or scaling down when less processing power is required.

Key concepts

Pipeline sizes: For vertical scalability

Digibee uses a serverless model, so you don't need to worry about infrastructure details when running your pipelines. Instead, you define the size of each pipeline during deployment. The Digibee Integration Platform offers three pipeline sizes:

SMALL: 10 consumers, 20% of 1 CPU, 64 MB memory

MEDIUM: 20 consumers, 40% of 1 CPU, 128 MB memory

LARGE: 40 consumers, 80% of 1 CPU, 256 MB memory
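As a quick reference, the size list above can be written as a small lookup table. This is only an illustrative sketch: the names `PipelineSize` and `PIPELINE_SIZES` are hypothetical and are not part of any Digibee API; only the numbers come from the list above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineSize:
    """Resources of one pipeline size (hypothetical helper type)."""
    consumers: int       # messages processed concurrently
    cpu_fraction: float  # share of 1 CPU
    memory_mb: int       # memory in MB


# Values copied from the size list above.
PIPELINE_SIZES = {
    "SMALL": PipelineSize(consumers=10, cpu_fraction=0.20, memory_mb=64),
    "MEDIUM": PipelineSize(consumers=20, cpu_fraction=0.40, memory_mb=128),
    "LARGE": PipelineSize(consumers=40, cpu_fraction=0.80, memory_mb=256),
}
```

Each step up doubles every resource, so a LARGE pipeline has four times the consumers, CPU share, and memory of a SMALL one.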

Consumers

Consumers define how many messages a pipeline can process concurrently. For example, a pipeline with 10 consumers can handle 10 messages in parallel, while one with a single consumer processes messages sequentially.
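The effect of the consumer count can be imitated with a worker pool. The sketch below is an analogy only and does not show how the Pipeline Engine is implemented: it assumes each consumer behaves like one worker thread, and the function name `peak_concurrency` is hypothetical.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def peak_concurrency(consumers: int, messages: int, work_seconds: float = 0.02) -> int:
    """Process `messages` items with `consumers` workers and
    return the highest number of items that were in flight at once."""
    lock = threading.Lock()
    in_flight = 0
    peak = 0

    def handle(_message: int) -> None:
        nonlocal in_flight, peak
        with lock:
            in_flight += 1
            peak = max(peak, in_flight)
        time.sleep(work_seconds)  # stand-in for real pipeline work
        with lock:
            in_flight -= 1

    # The pool size plays the role of the consumer count.
    with ThreadPoolExecutor(max_workers=consumers) as pool:
        list(pool.map(handle, range(messages)))
    return peak
```

With `consumers=1` the peak is always 1 (sequential processing). With `consumers=10` it never exceeds 10, no matter how many messages are waiting.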

Resources (CPU and Memory)

Pipeline size also defines the performance and memory allocation. Performance is determined by the number of CPU cycles to which the pipeline has access.

CPU: Controls how many cycles the pipeline can use.

Memory: Defines the amount of memory available to process and store data during execution.

Replicas: For horizontal scalability

Triggers act as the message entry point for pipeline execution on the Digibee Integration Platform. These messages are routed to the queues mechanism, where they become available for the pipeline to consume.

When deploying a pipeline, you can configure the number of replicas. For example, a SMALL pipeline with 2 replicas has twice the processing capacity of one with a single replica. Replicas provide not only more processing capacity and scalability but also higher availability: if one replica fails, the others take over.
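The arithmetic behind horizontal scaling is simple enough to sketch. The helper names below are hypothetical. The model assumes that capacity grows linearly with the number of replicas, as the SMALL example suggests, and that a failed replica removes its share of consumers until it is replaced.

```python
def total_consumers(consumers_per_replica: int, replicas: int) -> int:
    """Concurrent messages a deployment can process across all replicas."""
    if replicas < 1:
        raise ValueError("a deployment needs at least one replica")
    return consumers_per_replica * replicas


def consumers_after_failures(consumers_per_replica: int, replicas: int, failed: int) -> int:
    """Remaining concurrency while `failed` replicas are down (simplified model)."""
    healthy = max(replicas - failed, 0)
    return consumers_per_replica * healthy
```

For a SMALL pipeline (10 consumers), `total_consumers(10, 2)` gives 20, and `consumers_after_failures(10, 2, 1)` gives 10: the deployment keeps processing at half capacity instead of stopping.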

Usage examples

According to the infrastructure logic, one LARGE pipeline is equivalent to 4 SMALL pipelines, and multiple SMALL pipelines can match the throughput of a LARGE pipeline in some cases. That does not make them equivalent for every workload. In many situations, especially those that involve processing large messages, only vertically scaled pipelines (for example, MEDIUM or LARGE) deliver the expected results.
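A rough way to see why the two options differ is to compare totals with per-replica limits. The sketch below uses the figures from the size list on this page. The function names and the 100 MB working-memory figure are hypothetical, and real memory needs depend on the pipeline, not only on message size.

```python
# Per-replica resources, copied from the size list on this page.
SIZES = {
    "SMALL": {"consumers": 10, "memory_mb": 64},
    "MEDIUM": {"consumers": 20, "memory_mb": 128},
    "LARGE": {"consumers": 40, "memory_mb": 256},
}


def deployment_totals(size: str, replicas: int) -> dict:
    """Aggregate consumers and memory for `replicas` replicas of `size`."""
    spec = SIZES[size]
    return {
        "consumers": spec["consumers"] * replicas,
        "memory_mb": spec["memory_mb"] * replicas,
        "memory_per_replica_mb": spec["memory_mb"],
    }


def fits_in_one_replica(size: str, working_memory_mb: int) -> bool:
    """Hypothetical check: can one replica hold the memory a message needs?"""
    return working_memory_mb <= SIZES[size]["memory_mb"]
```

Four SMALL replicas and one LARGE replica both total 40 consumers and 256 MB, but each SMALL replica still has only 64 MB. A message that needs about 100 MB of working memory therefore fits in a LARGE replica and in none of the SMALL ones.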

Timeouts and Expiration

Triggers can be configured with the following time controls:

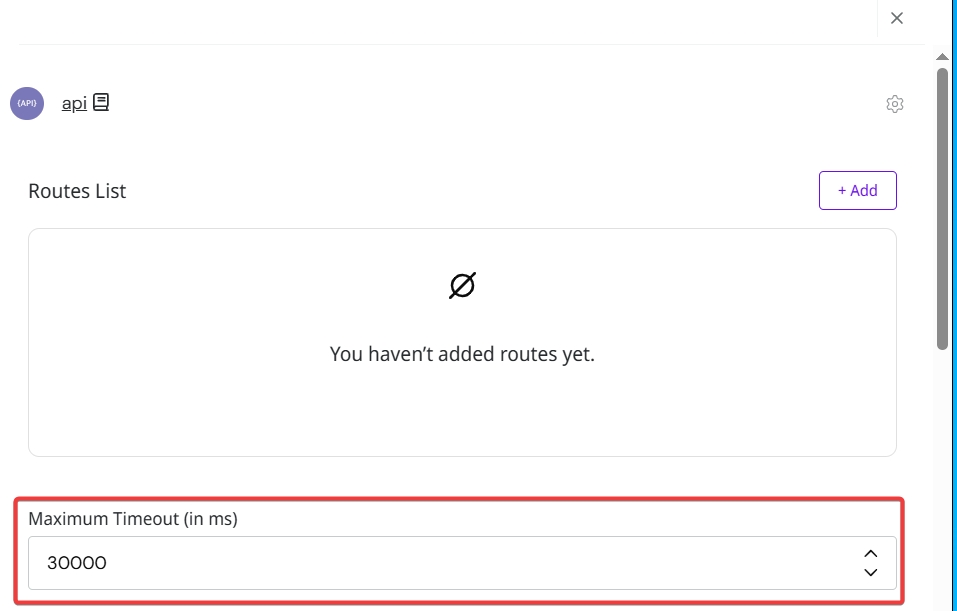

Timeout: Maximum time the trigger waits for a pipeline response.

Expiration: Maximum time a message can stay in the queue before being picked up.

Timeout

The trigger timeout should be greater than the sum of the timeouts of all external connectors. This ensures that the pipeline has enough time to handle potential errors before it’s terminated. Check out these tips for setting timeout values.
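The rule above can be checked with a few lines of arithmetic. This is a minimal sketch with hypothetical function names. It assumes all timeouts are expressed in the same unit (milliseconds here) and that the connectors run one after another, so their timeouts add up.

```python
from typing import Iterable


def connector_timeout_budget_ms(connector_timeouts_ms: Iterable[int]) -> int:
    """Worst-case time spent waiting on external connectors, in ms."""
    return sum(connector_timeouts_ms)


def is_trigger_timeout_safe(trigger_timeout_ms: int, connector_timeouts_ms: Iterable[int]) -> bool:
    """True when the trigger timeout is strictly greater than the connector budget."""
    return trigger_timeout_ms > connector_timeout_budget_ms(connector_timeouts_ms)
```

For example, a pipeline with two external connectors that time out at 10,000 ms and 15,000 ms has a budget of 25,000 ms, so a trigger timeout of 30,000 ms passes the check and one of 25,000 ms does not.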

Expiration

Not all triggers support expiration configuration. For example:

Event Trigger (asynchronous) can hold messages in the queue longer, making it useful to define the expiration time.

REST Trigger (synchronous) relies on immediate responses, so configuring an expiration time is not useful.

Internally, the Platform assigns a non-configurable expiration for synchronous triggers to prevent message loss.
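Conceptually, expiration is a comparison between how long a message has waited in the queue and the configured limit. The sketch below only illustrates that idea. The function name and the use of seconds are assumptions, and it does not reflect how the Platform tracks time internally.

```python
def is_expired(enqueued_at_s: float, now_s: float, expiration_s: float) -> bool:
    """True when a message waited in the queue longer than `expiration_s`
    without being picked up by a consumer."""
    waited_s = now_s - enqueued_at_s
    return waited_s > expiration_s
```

A message enqueued at t = 0 with a 60-second expiration is still valid at t = 45 and counts as expired at t = 61.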

Execution control

Each consumer processes its messages sequentially, one at a time. A pipeline deployed with a single consumer therefore processes only one message at a time. While a message is being processed:

It’s marked as "in use".

Other replicas won’t process it concurrently.

If the pipeline crashes or is restarted, the message in execution is returned to the queue for reprocessing.
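The behavior described above can be modeled with a toy in-memory queue. This is a simplified sketch and not the Platform's queues mechanism: the class `ToyQueue` and its methods are hypothetical, and putting returned messages at the front of the queue is an assumption made for the example.

```python
from collections import deque
from typing import Optional


class ToyQueue:
    """Toy model of 'in use' marking and return-to-queue on a crash."""

    def __init__(self, messages):
        self._ready = deque(messages)
        self._in_use = {}  # message -> replica currently processing it

    def take(self, replica: str) -> Optional[str]:
        """Hand the next ready message to `replica` and mark it as in use."""
        if not self._ready:
            return None
        message = self._ready.popleft()
        self._in_use[message] = replica
        return message

    def ack(self, message: str) -> None:
        """Processing finished: the message leaves the queue for good."""
        del self._in_use[message]

    def replica_crashed(self, replica: str) -> list:
        """Return the crashed replica's in-flight messages to the queue."""
        returned = [m for m, r in self._in_use.items() if r == replica]
        for message in reversed(returned):
            del self._in_use[message]
            self._ready.appendleft(message)  # assumption: retried first
        return returned
```

While replica `r1` holds message `a`, a call to `take("r2")` returns the next message, never `a`. After `replica_crashed("r1")`, message `a` is ready again and any replica can take it.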

Messages returned to the processing queue

Messages returned to the processing queue become available to other pipeline replicas, or to the same replica after it restarts. You can configure what the pipeline does when a message needs to be reprocessed. All triggers in the Platform have a configuration called Allow Redeliveries:

If enabled: The message can be reprocessed.

If disabled: The message is identified as a retry and rejected with an error.

The retry window is defined by:

Maximum timeout (for synchronous triggers)

Expiration time (for asynchronous triggers)
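The rules above amount to a small decision table. The sketch below restates them in code. The function names, the returned strings, and the parameter names are hypothetical and do not correspond to actual Platform settings or error messages.

```python
def on_delivery(is_redelivery: bool, allow_redeliveries: bool) -> str:
    """Decide what happens to a message when it reaches the pipeline."""
    if not is_redelivery:
        return "process"  # first delivery is always processed
    if allow_redeliveries:
        return "process"  # Allow Redeliveries enabled: reprocess
    return "reject"       # disabled: identified as a retry, rejected with an error


def retry_window_s(synchronous: bool, max_timeout_s: float, expiration_s: float) -> float:
    """Window in which a retry can happen: the maximum timeout for
    synchronous triggers, the expiration time for asynchronous ones."""
    return max_timeout_s if synchronous else expiration_s
```

For instance, `on_delivery(True, False)` yields `"reject"`, while an asynchronous trigger with a 300-second expiration gets a 300-second retry window regardless of its timeout.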

You can also detect whether a message is being reprocessed by using Double Braces to access the metadata scope.
