Autoscaling

Learn how Autoscaling works on the Digibee Integration Platform.

Autoscaling features are only available for realms using the Consumption-Based model.

If your realm uses this model, it is highly recommended to activate Autoscaling to benefit from automatic elasticity, which can reduce consumption costs.

What is autoscaling

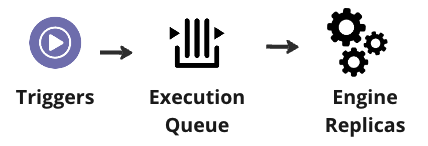

Autoscaling is the process of adjusting computational resources based on workload. Pipelines are horizontally scalable, meaning that replicas can be dynamically created or removed depending on the number of messages (invocations) in the queue.

The Digibee Integration Platform continuously monitors the queue and adjusts the number of replicas to ensure optimal resource usage and efficient processing, regardless of the trigger type that generated the messages.

How autoscaling works

Every pipeline execution starts with a trigger. Different trigger types, such as Scheduler, Event or HTTP, have unique behaviors and activation criteria. These triggers determine whether a message is sent to the execution queue for further processing or whether it should be skipped or rejected altogether.

Autoscaling algorithm

The autoscaling algorithm scales pipelines horizontally. Every 10 seconds, the Platform monitors the system and can execute the following commands:

Activation (from 0 to 1)

If the minimum number of replicas is set to zero, the pipeline can receive messages but has no replicas running.

The first replica is deployed when the first message arrives, which can add latency.

The minimum startup time is 20 seconds.

Once initialized, the replica processes the first and subsequent messages.

The number and type of connectors in the pipeline can impact startup time.

Scale up (from 1 to N)

When the number of messages in the queue exceeds 70% of total concurrent executions, the Platform deploys additional replicas.
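The scale-up condition can be sketched as a simple threshold check. This is a minimal illustration, not the Platform's implementation: the function and parameter names are hypothetical, and it assumes that "total concurrent executions" means the number of running replicas multiplied by the concurrent executions configured per replica.

```python
SCALE_UP_THRESHOLD_PERCENT = 70  # 70% of total concurrent executions, per the text above


def should_scale_up(queued_messages: int, replicas: int, concurrency_per_replica: int) -> bool:
    """Return True when the queue exceeds 70% of total concurrent executions.

    Assumption: total concurrent executions = replicas * concurrency_per_replica.
    Integer arithmetic is used to avoid floating-point rounding at the boundary.
    """
    total_concurrent = replicas * concurrency_per_replica
    return queued_messages * 100 > SCALE_UP_THRESHOLD_PERCENT * total_concurrent


# One replica with 10 concurrent executions: the threshold is 7 messages.
print(should_scale_up(7, replicas=1, concurrency_per_replica=10))  # False: 7 does not exceed 7
print(should_scale_up(8, replicas=1, concurrency_per_replica=10))  # True: 8 exceeds 7
```

The value of 10 concurrent executions is only an example; use the concurrency configured for your pipeline.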

Scale down (from N to 1)

The Platform reduces replicas when fewer resources are needed. The system calculates the required number of replicas using the formula below:
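As an illustration only, the sketch below shows one plausible way to estimate the required number of replicas. It assumes the requirement is the queue size divided by the concurrent executions per replica, rounded up, and bounded between 1 and a configured maximum. This formula, the function name, and the parameter names are assumptions, and the Platform's actual calculation may differ.

```python
def required_replicas(queued_messages: int, concurrency_per_replica: int, max_replicas: int) -> int:
    """Estimate the replicas needed for the current queue (assumed formula).

    Scale down stops at 1 replica; scaling to zero is handled separately
    by the cooldown described in the next section.
    """
    if concurrency_per_replica <= 0:
        raise ValueError("concurrency_per_replica must be positive")
    # Ceiling division using integers only.
    needed = (queued_messages + concurrency_per_replica - 1) // concurrency_per_replica
    return max(1, min(needed, max_replicas))


print(required_replicas(25, concurrency_per_replica=10, max_replicas=4))  # 3
print(required_replicas(3, concurrency_per_replica=10, max_replicas=4))   # 1
print(required_replicas(0, concurrency_per_replica=10, max_replicas=4))   # 1
```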

Scale-to-Zero (Cooldown)

If the minimum number of replicas is zero and the single remaining replica is idle for more than 60 seconds, the pipeline scales down to zero. New replicas are deployed only when new messages arrive.

Custom Cooldown: You can configure a custom cooldown time between 60 and 300 seconds in the pipeline’s Advanced Settings. This defines how long the last replica must remain idle before the pipeline scales down to zero.
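The cooldown rule can be sketched as follows. This is a hedged illustration: the function names and parameters are hypothetical, and only the 60-second default and the 60 to 300 second range come from the text above.

```python
DEFAULT_COOLDOWN_SECONDS = 60   # default idle time before scaling to zero
MAX_COOLDOWN_SECONDS = 300      # upper bound for a custom cooldown


def validate_cooldown(seconds: int) -> int:
    """Reject custom cooldown values outside the documented 60-300 second range."""
    if not DEFAULT_COOLDOWN_SECONDS <= seconds <= MAX_COOLDOWN_SECONDS:
        raise ValueError("cooldown must be between 60 and 300 seconds")
    return seconds


def should_scale_to_zero(
    min_replicas: int,
    running_replicas: int,
    idle_seconds: float,
    cooldown_seconds: int = DEFAULT_COOLDOWN_SECONDS,
) -> bool:
    """Return True when the last replica has been idle longer than the cooldown."""
    cooldown = validate_cooldown(cooldown_seconds)
    return min_replicas == 0 and running_replicas == 1 and idle_seconds > cooldown


print(should_scale_to_zero(0, 1, idle_seconds=61))                         # True
print(should_scale_to_zero(0, 1, idle_seconds=120, cooldown_seconds=300))  # False
print(should_scale_to_zero(1, 1, idle_seconds=600))                        # False: minimum is 1
```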

Autoscaling on the Digibee Integration Platform

The autoscaling process on the Digibee Integration Platform follows these steps:

Phase 1: Activation of the first replica

The system executes an Activation command to create and start the first replica.

This command triggers the processing of the first message in the queue.

Components for pipeline processing are initialized only when the replica is deployed, that is, when messages arrive.

Because this initialization can add latency to the first message’s total response time, we recommend setting the minimum number of replicas to 1 or more for latency-sensitive pipelines.

Phase 2: Processing of messages

After the first replica is deployed and initialized, the Platform processes the first set of messages according to the concurrency settings.

When new messages arrive:

They are processed by available consumers if capacity allows.

Otherwise, a Scale Up command starts a new replica.

A Scale Up has a smaller impact on total response time than an Activation, because it is triggered when the queue reaches 70% of the concurrent execution capacity, while the running replicas are still processing messages.

Total processing capacity formula:
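A minimal sketch of the capacity calculation, assuming that total processing capacity is the number of running replicas multiplied by the concurrent executions each replica allows. This relationship, the function name, and the example value of 10 concurrent executions per replica are assumptions for illustration, not figures confirmed by this page.

```python
def total_processing_capacity(replicas: int, concurrency_per_replica: int) -> int:
    """Messages that can be processed at the same time across all replicas (assumed formula)."""
    if replicas < 0 or concurrency_per_replica < 0:
        raise ValueError("replicas and concurrency_per_replica must not be negative")
    return replicas * concurrency_per_replica


# Capacity as a pipeline scales from 1 to 4 replicas, with a hypothetical
# 10 concurrent executions per replica.
for replicas in range(1, 5):
    print(replicas, total_processing_capacity(replicas, 10))
# Prints: 1 10, 2 20, 3 30, 4 40 (one pair per line)
```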

Phase 3: Scaling down

If the queue has only a few messages, the Platform reduces the number of running replicas until only one remains.

If the single replica remains idle for more than 60 seconds, a Cooldown command scales the pipeline down to zero replicas.

New replicas are deployed only when the next set of messages arrives.

Autoscaling strategies

There are two types of autoscaling deployment strategies on the Digibee Integration Platform: Scale-to-Zero and Always On.

Scale-to-Zero

The pipeline is deployed with autoscaling enabled and a minimum of zero replicas. Pipelines can receive new requests but operate with “zero replicas”, incurring no cost until the first message is received.

Recommended usage scenario

This strategy is ideal for workloads that don’t require immediate response for the first message. For example, a pipeline that runs once a day can remain inactive most of the time.

See more scenarios where Scale-to-Zero is applicable:

Scheduled pipelines using the Scheduler trigger.

Low-criticality workloads where the first message can tolerate extra latency.

Workloads aiming to maximize cost savings during idle periods.

Always On

The pipeline is deployed with autoscaling enabled and a minimum of 1 or more replicas. At least one replica is always running, ready to process the first messages immediately.

Recommended usage scenario

This strategy ensures low-latency responses even during low-traffic periods. Always On is applicable in the following scenarios:

High-criticality workloads where extra response time for the first message is not acceptable.

Optimization tip: Instead of running a Large pipeline with 1 replica and no autoscaling, consider a Small pipeline with autoscaling enabled and 1 to 4 replicas.
