How to send pipeline logs to external monitoring systems

Learn how to export integration pipeline logs from the Digibee Integration Platform to external monitoring systems.

Logs help you understand what happened during pipeline executions and troubleshoot unexpected behavior. You can view them directly on the Pipeline Logs page in Monitor.

However, if you need to monitor logs in external tools such as Elasticsearch, Kibana, Graylog, or Splunk, you can build dedicated flows using the Log Stream pattern.

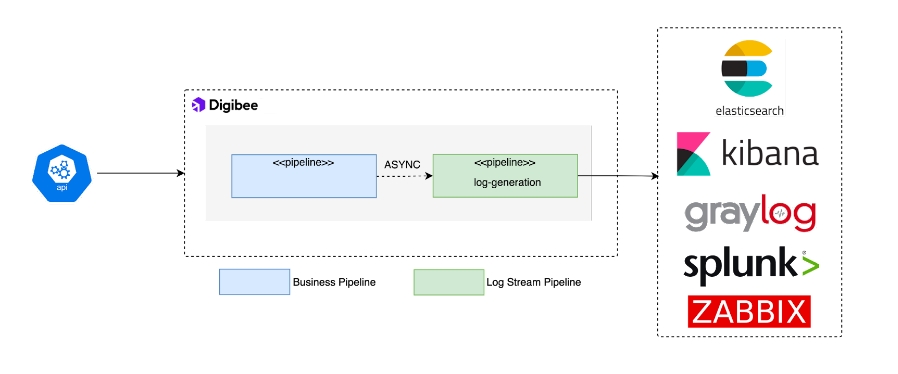

Architecture: Log Stream pattern

The Log Stream pattern uses Digibee’s internal Event Broker to decouple log transmission from the main flow, so that sending logs does not overload or delay the primary integration execution. The pattern splits your flow into two pipelines:

Business Rule pipeline: Handles core business logic and generates log events.

Log Stream pipeline: Listens to events and exports logs to the desired external services.
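To make the decoupling concrete, the following minimal Python sketch models it outside Digibee. It assumes an in-process `queue.Queue` as a stand-in for the Event Broker; the function names and the `log-stream` event name are hypothetical and are not part of any Digibee API. The business function publishes an event and returns at once, while a separate consumer thread performs the slow export.

```python
import queue
import threading
import time


def business_rule_pipeline(broker: queue.Queue, order_id: str) -> str:
    """Runs the core logic, publishes a log event, and returns right away.

    It never waits for the log to reach an external system.
    """
    result = f"order {order_id} processed"
    broker.put({"event": "log-stream", "orderId": order_id, "message": result})
    return result


def log_stream_pipeline(broker: queue.Queue, sink: list) -> None:
    """Consumes log events and exports them; a list plays the external system."""
    while True:
        event = broker.get()
        if event is None:  # sentinel value: stop consuming
            break
        time.sleep(0.05)  # simulate a slow external call, e.g. an HTTP request
        sink.append(event)


def run_demo(n: int) -> tuple[list, list]:
    broker: queue.Queue = queue.Queue()
    sink: list = []
    consumer = threading.Thread(target=log_stream_pipeline, args=(broker, sink))
    consumer.start()
    # The business results are ready as soon as this loop ends,
    # even though log events may still be waiting to be exported.
    results = [business_rule_pipeline(broker, str(i)) for i in range(n)]
    broker.put(None)  # ask the consumer to stop after draining the queue
    consumer.join()
    return results, sink


if __name__ == "__main__":
    results, exported = run_demo(3)
    print(results)
    print(f"{len(exported)} log events exported")
```

Because `business_rule_pipeline` only enqueues the event, it completes even when no consumer is running. The export cost is paid entirely by the consumer, which is the property the Log Stream pattern relies on.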

The sections below explain how to configure each of these pipelines.

Create the Business Rule pipeline

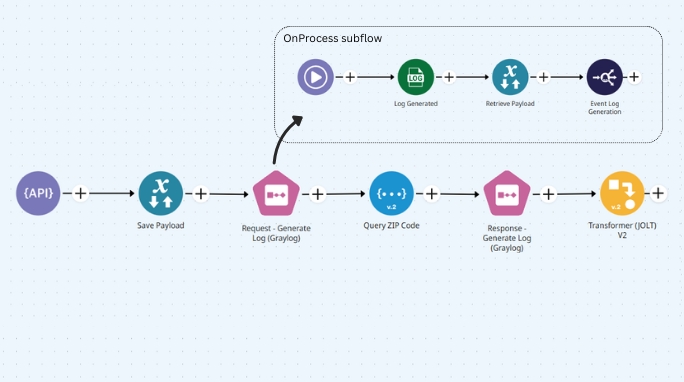

This pipeline defines the main flow logic and generates log events.

At the point where you would typically place a Log connector, follow these steps to apply the Log Stream pattern:

Use a Session Management connector (in “put data” mode) to store key values from the pipeline context, such as user information or error details, that you will retrieve later, before publishing the log. This ensures that all relevant log data is available when the Log Stream pipeline is triggered.

Add a Block Execution connector, which creates two subflows:

OnProcess (mandatory): The main execution path.

OnException: Triggered only when an error occurs in the OnProcess subflow.

Inside OnProcess, add the following:

A Session Management connector (in “get data” mode) to retrieve previously stored context

An Event Publisher to trigger the Log Stream pipeline

Configure the Event Publisher to send the desired log payload, for example:

Tip: Use Double Braces expressions such as {{ metadata.$ }} to extract data about the flow and the pipeline, which makes analysis easier when troubleshooting. Learn more about referencing metadata with Double Braces.

Inside OnException, add a Throw Error connector to avoid silent failures.
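The steps above map roughly onto ordinary error-handling code. The following Python sketch is an analogy, not Digibee code: `SessionStore`, `publish_event`, `emit_log`, the `logContext` key, the `log-stream` event name, and the metadata fields are all hypothetical. The `try` body plays the OnProcess subflow, and the `except` body plays OnException by rethrowing instead of swallowing the error. In a real pipeline, you would build the payload with Double Braces rather than a Python dict merge.

```python
import json
from typing import Any


class PipelineError(Exception):
    """Plays the role of the Throw Error connector: fails the execution loudly."""


class SessionStore:
    """Hypothetical stand-in for the Session Management connector."""

    def __init__(self) -> None:
        self._data: dict[str, dict[str, Any]] = {}

    def put(self, execution_id: str, key: str, value: Any) -> None:
        """Like "put data" mode: store a value for this execution."""
        self._data.setdefault(execution_id, {})[key] = value

    def get(self, execution_id: str, key: str) -> Any:
        """Like "get data" mode: raises KeyError if nothing was stored."""
        return self._data[execution_id][key]


def publish_event(outbox: list, event_name: str, payload: dict) -> None:
    """Hypothetical Event Publisher: serializes the payload for the broker."""
    outbox.append({"name": event_name, "body": json.dumps(payload)})


def emit_log(session: SessionStore, outbox: list, execution_id: str,
             metadata: dict, event_name: str = "log-stream") -> None:
    """Replaces a Log connector with the Block Execution structure."""
    try:
        # OnProcess: retrieve the stored context, then publish the log event.
        context = session.get(execution_id, "logContext")
        publish_event(outbox, event_name, {**metadata, **context})
    except Exception as exc:
        # OnException: rethrow so that the failure is not silent.
        raise PipelineError(f"could not publish log event: {exc!r}") from exc


if __name__ == "__main__":
    session = SessionStore()
    outbox: list = []
    # Earlier in the pipeline, before the Block Execution: store key context.
    session.put("exec-1", "logContext", {"user": "alice", "status": "OK"})
    emit_log(session, outbox, "exec-1", {"pipeline": "orders-api", "executionId": "exec-1"})
    print(outbox[0]["name"], outbox[0]["body"])
```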

The resulting structure in your Business Rule pipeline (where a Log connector would normally be used) should look like this:

Set up the Log Stream pipeline

This pipeline receives and processes log events. Follow these steps to configure it:

Set the pipeline’s trigger to Event Trigger and configure it to match the event name used in the Event Publisher in the Business Rule pipeline. This ensures the Log Stream pipeline is activated whenever a log event is published.

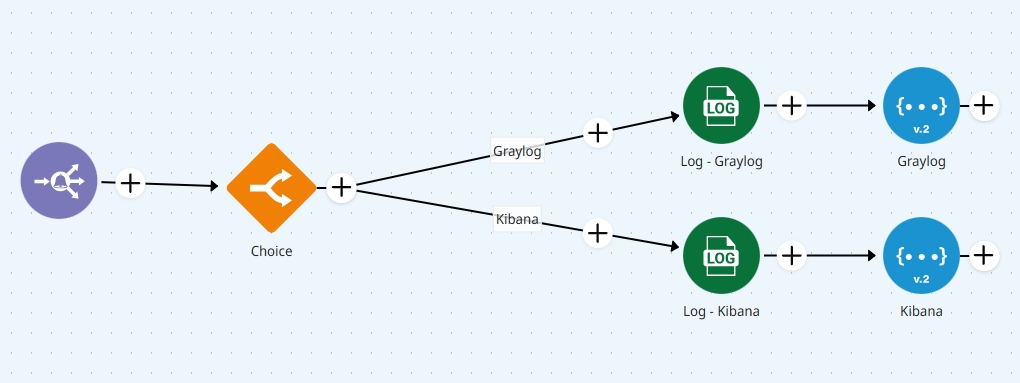

Use connectors like REST V2 or SOAP V3 to send log data to external tools. You can:

Send logs to multiple destinations

Route logs conditionally using a Choice connector

In the example below, a Choice connector directs logs to either Graylog or Kibana via a REST V2 connector:

Avoid placing too many Log connectors in your pipeline. Too many of them can degrade performance and increase the deployment size the pipeline requires.
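Conceptually, the Log Stream pipeline is an event handler that ignores events with other names and then branches. The Python sketch below assumes, for illustration only, that the Choice connector routes on a hypothetical `level` field: `ERROR` entries go to Graylog and everything else goes to Kibana. The two senders are plain callables standing in for REST V2 connectors, and the class and method names are hypothetical.

```python
import json
from typing import Callable

Sender = Callable[[dict], None]


class LogStreamPipeline:
    """Hypothetical sketch: an Event Trigger followed by a Choice with two branches."""

    def __init__(self, event_name: str, graylog: Sender, kibana: Sender) -> None:
        self.event_name = event_name  # must equal the name used by the Event Publisher
        self.graylog = graylog        # stands in for a REST V2 call to Graylog
        self.kibana = kibana          # stands in for a REST V2 call for Kibana

    def on_event(self, name: str, body: str) -> bool:
        """Handles one event; returns False if it was not meant for this trigger."""
        if name != self.event_name:
            return False  # a mismatched name means this pipeline is never triggered
        log = json.loads(body)
        # Choice connector: routing on "level" is an assumption for illustration.
        if log.get("level") == "ERROR":
            self.graylog(log)
        else:
            self.kibana(log)
        return True


if __name__ == "__main__":
    to_graylog: list = []
    to_kibana: list = []
    pipeline = LogStreamPipeline("log-stream", to_graylog.append, to_kibana.append)
    pipeline.on_event("log-stream", json.dumps({"level": "ERROR", "message": "timeout"}))
    pipeline.on_event("log-stream", json.dumps({"level": "INFO", "message": "ok"}))
    pipeline.on_event("other-event", "{}")
    print(len(to_graylog), len(to_kibana))  # prints: 1 1
```

To send logs to multiple destinations instead of routing them, call every sender for each event rather than choosing one.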

Additional information

Best practices for external log calls

Wrap external calls in a Block Execution connector.

OnProcess: Use the REST V2 connector and validate the response with a Choice connector followed by a Throw Error connector, or with an Assert connector.

OnException: Send alerts about failed deliveries using an Email V2 connector, followed by a Throw Error connector.

Enable retry logic in the REST V2 connector’s Advanced Settings.

Mask or redact any sensitive information (such as PII, credentials, or tokens) before sending logs.

Use field-level controls or Capsules to enforce compliance with data protection policies.

Learn more about Key practices for securing sensitive information in pipelines with Digibee.
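The following Python sketch combines these practices in one place, as an illustration under stated assumptions rather than a replacement for connector configuration. `SENSITIVE_KEYS` is a hypothetical field list, `send` stands in for a REST V2 call that returns an HTTP status code, and `alert` stands in for the Email V2 connector. In an actual pipeline, you enable retries in the REST V2 connector’s Advanced Settings instead of writing a loop; the fixed delay used here is an arbitrary choice, not a description of how the connector retries.

```python
import time
from typing import Callable

# Hypothetical list of field names to mask; adapt it to your own data model.
SENSITIVE_KEYS = {"password", "token", "authorization", "email"}


class DeliveryError(Exception):
    """Plays the role of the Throw Error connector after all retries fail."""


def redact(payload: dict) -> dict:
    """Returns a copy with sensitive values masked, recursing into nested dicts."""
    masked = {}
    for key, value in payload.items():
        if key.lower() in SENSITIVE_KEYS:
            masked[key] = "***"
        elif isinstance(value, dict):
            masked[key] = redact(value)
        else:
            masked[key] = value
    return masked


def ship_log(payload: dict, send: Callable[[dict], int], alert: Callable[[str], None],
             retries: int = 3, delay: float = 0.5) -> int:
    """Sends a redacted log and returns the attempt number that succeeded.

    `send` stands in for the REST call and returns an HTTP status code.
    """
    safe = redact(payload)  # mask before the data leaves the platform
    outcome = "no attempt made"
    for attempt in range(1, retries + 1):
        try:
            status = send(safe)
            if 200 <= status < 300:  # response check, like Choice + Throw Error or Assert
                return attempt
            outcome = f"HTTP {status}"
        except ConnectionError as exc:
            outcome = f"connection error: {exc}"
        if attempt < retries:
            time.sleep(delay)  # fixed delay between attempts (an illustrative choice)
    # OnException: alert (Email V2 in the steps above), then fail loudly.
    alert(f"log delivery failed after {retries} attempts ({outcome})")
    raise DeliveryError(outcome)


if __name__ == "__main__":
    responses = iter([503, 200])
    attempts = ship_log({"message": "order created", "token": "abc123"},
                        send=lambda log: next(responses),
                        alert=print, delay=0.1)
    print(f"delivered on attempt {attempts}")  # prints: delivered on attempt 2
```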

Alternative: Use Capsules

Instead of creating a dedicated Log Stream pipeline, consider using Capsules to centralize and reuse log-handling logic.

Learn more about using Capsules.

Practice challenge

Put your knowledge into practice! Try the Log Stream Pattern Challenge on Digibee Academy.
