REST Trigger

Learn more about the REST Trigger and how to use it on the Digibee Integration Platform.

When a pipeline is configured and published with the REST Trigger, a REST endpoint is created automatically. After deployment, you can view this endpoint by clicking the pipeline card on the Run screen.

With this trigger, you can create APIs that follow the REST standard and quickly define which HTTP methods your endpoint responds to.

Parameters

Below are the trigger's configuration parameters. Parameters that support Double Braces expressions are marked with (DB).

| Parameter | Description | Default value | Data type |
| --- | --- | --- | --- |
| Methods | Configures the HTTP verbs the endpoint supports after deployment. If no value is provided, the default value is used. | POST, PUT, GET, PATCH, DELETE and OPTIONS | String |
| Maximum Timeout | Maximum time, in milliseconds, that the pipeline has to process information before returning a response. Maximum value: 900000. | 30000 | Integer |
| Maximum Request Size | Maximum payload size, in MB. The maximum configurable value is 5 MB. | 5 | Integer |
| Response Headers (DB) | Headers returned by the endpoint when pipeline processing is complete. Cannot be left empty. Accepts Double Braces. | N/A | String |
| Add Cross-Origin Resource Sharing (CORS) | Adds the CORS headers to be returned by the endpoint when pipeline processing is complete. | False | Boolean |
| CORS Headers | Specifies the CORS headers for the pipeline. | N/A | Key-value pair |

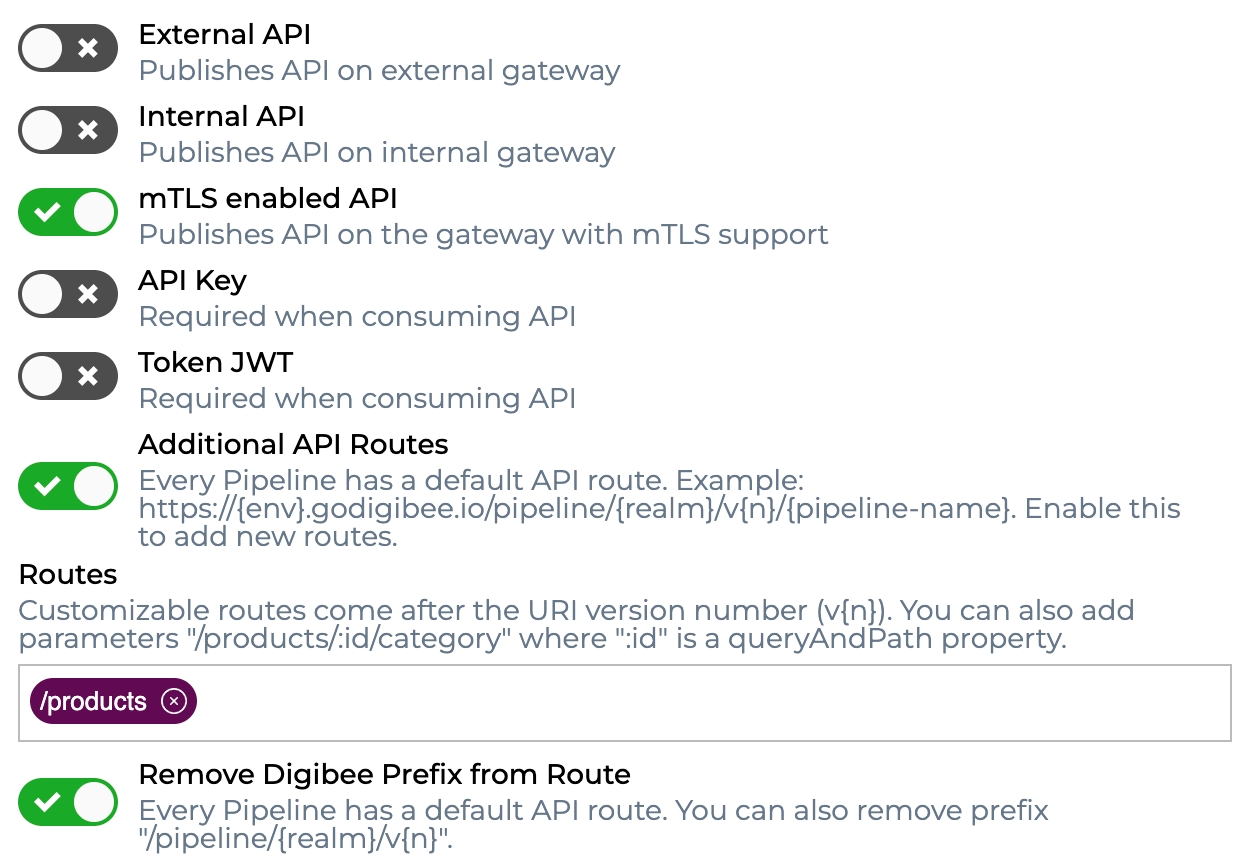

| Parameter | Description | Default value | Data type |
| --- | --- | --- | --- |
| External API | If enabled, publishes the API to an external gateway. | True | Boolean |
| Internal API | If enabled, publishes the API to an internal gateway. The pipeline can have both External API and Internal API enabled at the same time. | False | Boolean |
| mTLS enabled API | If enabled, publishes the API to a dedicated gateway with mTLS enabled by default. | False | Boolean |
| API Key | If enabled, the endpoint can only be consumed with an API key configured in the Digibee Integration Platform. | False | Boolean |
| Token JWT | If enabled, the endpoint can only be consumed if the request includes a JWT previously generated by another endpoint. See the article about JWT implementation for more details. | False | Boolean |
| Basic Auth | If enabled, the endpoint can only be consumed if the request includes Basic Auth credentials. These credentials can be registered beforehand on the Consumers page of the Digibee Integration Platform. | False | Boolean |

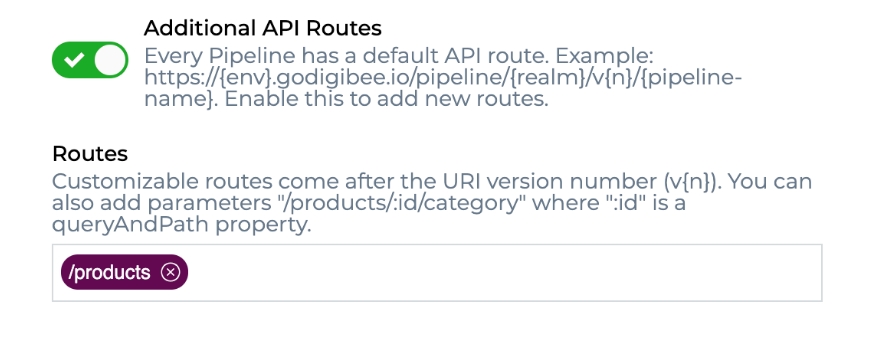

| Parameter | Description | Default value | Data type |
| --- | --- | --- | --- |
| Additional API Routes | If enabled, allows new routes to be configured for the trigger. See the Additional API Routes section below. | False | Boolean |
| Remove Digibee Prefix from Route | Removes the default Digibee route prefix if certain conditions are met. See the Remove Digibee Prefix from Route section below. | False | Boolean |
| Routes | Displayed when Additional API Routes is enabled. Defines the additional endpoint routes. | N/A | String |

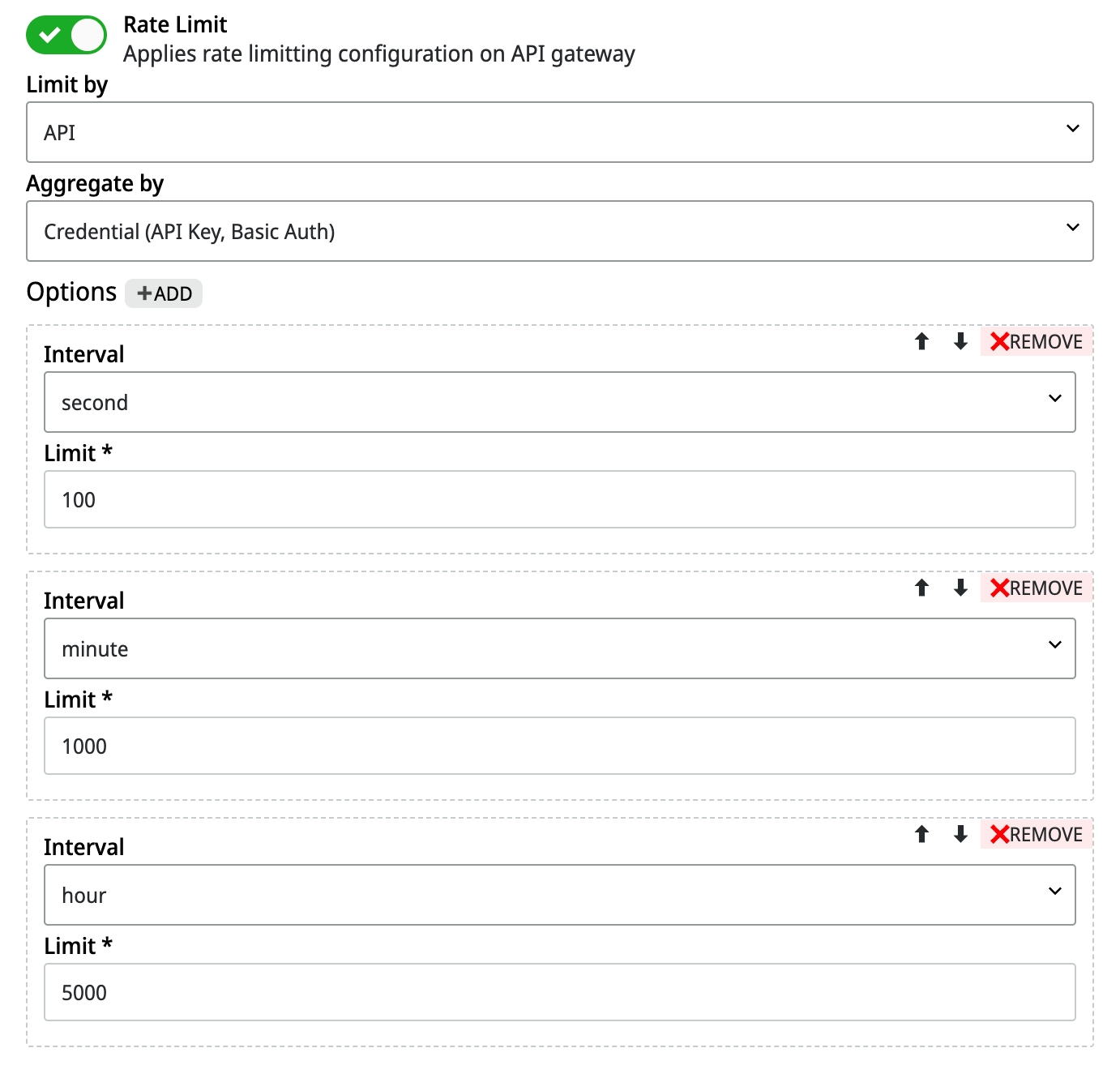

| Parameter | Description | Default value | Data type |
| --- | --- | --- | --- |
| Rate Limit | If enabled, applies a rate limiting configuration on the API gateway. Available only when API Key or Basic Auth is enabled. | False | Boolean |
| Limit by | Defines the entity the limits apply to. Options: API. | API | String |
| Aggregate by | Defines the entity by which limits are aggregated. Options: Consumer and Credential (API Key, Basic Auth). | Consumer | String |
| Options | Defines the maximum number of requests allowed within a time interval. | N/A | Options of Rate Limit |
| Interval | Defines the time interval for the request limit. Options: second, minute, hour, day, and month. | Second | String |
| Limit | Defines the maximum number of requests users can make in the specified time interval. | N/A | Integer |
| Allow Redelivery Of Messages | If enabled, allows the message to be resent if the Pipeline Engine fails. See the article about the Pipeline Engine for more details. | False | Boolean |

Additional parameter information

Maximum Request Size

If the payload sent by the endpoint consumer exceeds the limit, the endpoint returns status code 413 with the following message:

Add Cross-Origin Resource Sharing (CORS) - CORS Headers

Cross-Origin Resource Sharing (CORS) is a mechanism that tells the browser which origins are allowed to make requests. These parameters define CORS rules that apply only to this pipeline. To configure CORS globally instead of on each pipeline individually, see the CORS HTTP header policy.

To enter multiple values in a header, separate them with commas and don't add a space before or after each comma. Avoid special characters in keys, as they may cause failures in proxies and gateways.
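The formatting rule above can be sketched in Python. This is a hypothetical helper for preparing values before entering them in the CORS Headers parameter, not part of the platform. The origin https://app.example.com is a placeholder, and treating "special characters" as anything other than letters, digits, and hyphens is an assumption.

```python
import re
from typing import Dict, List

# Assumption: "special characters" is interpreted here as anything other than
# ASCII letters, digits, and hyphens, which covers the standard CORS header names.
_SAFE_KEY = re.compile(r"[A-Za-z0-9-]+")


def format_cors_headers(headers: Dict[str, List[str]]) -> Dict[str, str]:
    """Join each header's values with commas (no surrounding spaces) and reject unsafe keys."""
    formatted: Dict[str, str] = {}
    for key, values in headers.items():
        if not _SAFE_KEY.fullmatch(key):
            raise ValueError(f"header key contains special characters: {key!r}")
        # Strip stray whitespace so no space appears before or after a comma.
        formatted[key] = ",".join(value.strip() for value in values)
    return formatted


if __name__ == "__main__":
    cors = format_cors_headers({
        "Access-Control-Allow-Origin": ["https://app.example.com"],
        "Access-Control-Allow-Methods": ["GET", " POST", "OPTIONS "],
        "Access-Control-Allow-Headers": ["Content-Type", "Authorization"],
    })
    for key, value in cors.items():
        print(f"{key}: {value}")
```

Running it prints each header with comma-joined values, for example `Access-Control-Allow-Methods: GET,POST,OPTIONS`.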

mTLS enabled API

When mTLS enabled API is active, the pipeline can still have the External API and Internal API options enabled at the same time, but we recommend leaving them disabled. This option does not support API Key, JWT, or Basic Auth.

To use mTLS in your realm, submit a request via chat. We will send you the information needed to install this service.

Additional API Routes

As described above, this option lets you configure new routes for the endpoint.

When a pipeline is deployed, a URL is created automatically. You can customize its route as needed, including receiving parameters through the route.
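Conceptually, a route with a parameter acts as a template that is compared with the request path segment by segment. The Python sketch below illustrates only that idea. It is not the gateway's matching logic, and the route /product-list/{id} and the function name are hypothetical. On the platform, values captured this way reach the pipeline in the queryAndPath field described at the end of this page.

```python
from typing import Dict, Optional


def match_route(template: str, path: str) -> Optional[Dict[str, str]]:
    """Match `path` against a route template such as "/product-list/{id}".

    Segments wrapped in braces capture the value at the same position in the
    path. All other segments must match exactly. Returns the captured
    parameters, or None if the path does not fit the template.
    """
    template_parts = template.strip("/").split("/")
    path_parts = path.strip("/").split("/")
    if len(template_parts) != len(path_parts):
        return None
    params: Dict[str, str] = {}
    for expected, actual in zip(template_parts, path_parts):
        if expected.startswith("{") and expected.endswith("}"):
            if not actual:
                return None  # a parameter segment cannot be empty
            params[expected[1:-1]] = actual
        elif expected != actual:
            return None
    return params


if __name__ == "__main__":
    print(match_route("/product-list/{id}", "/product-list/42"))  # {'id': '42'}
    print(match_route("/product-list/{id}", "/orders/42"))        # None
```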

After deployment, the pipeline URL has the following structure:

TEST:

or PROD:

{realm}: the name of the realm.

v{n}: the pipeline's major version.

{pipeline-name}: the name given to the pipeline.
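The placeholders can also be filled in programmatically, for example when scripts call several pipelines. In this hypothetical Python helper, the template argument is whichever structure above applies (TEST or PROD). The example host gateway.example.invalid is a placeholder, not a Digibee address. The version is assumed to be a dotted string such as 2.3.1, and only its major part is used.

```python
def build_pipeline_url(template: str, realm: str, version: str, pipeline_name: str) -> str:
    """Fill the {realm}, v{n} and {pipeline-name} placeholders of a URL template.

    Only the major part of the pipeline version is used for v{n}:
    version "2.3.1" becomes "v2".
    """
    major = version.split(".", 1)[0]
    return (
        template.replace("{realm}", realm)
        .replace("v{n}", f"v{major}")
        .replace("{pipeline-name}", pipeline_name)
    )


# Hypothetical template: the host below is a placeholder, not a real Digibee address.
EXAMPLE_TEMPLATE = "https://gateway.example.invalid/{realm}/v{n}/{pipeline-name}"

if __name__ == "__main__":
    print(build_pipeline_url(EXAMPLE_TEMPLATE, "my-realm", "2.3.1", "product-list"))
    # https://gateway.example.invalid/my-realm/v2/product-list
```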

Custom static route

Suppose you’ve created the product-list pipeline. Following the structure above, its URL would look like this:

Now, see how to configure a static route for this case.

With the configurations applied and the pipeline deployed, you will get a new URL:

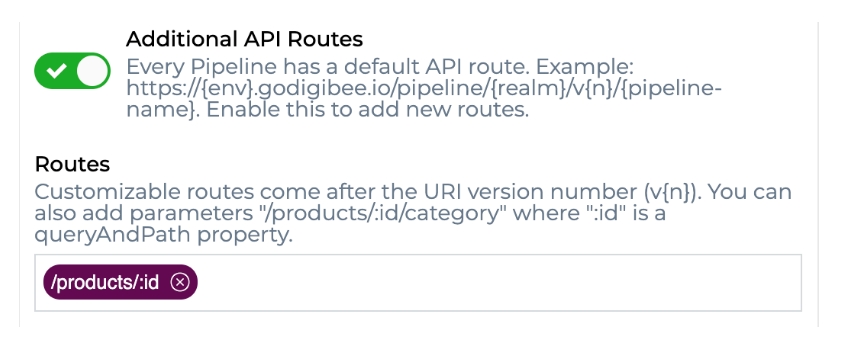

Custom route with parameter in the path

Using the same pipeline as an example, here is how to configure the route:

With the configurations applied and the pipeline deployed, you will get a new URL:

In this case, the endpoint consumer can send a request with a product's ID and receive information about that product only. Example request URL:

To use the value sent through the route inside the pipeline, use Double Braces syntax:

Remove Digibee Prefix from Route

As described in the parameters table, this option removes the default Digibee route prefix from the pipeline route when certain conditions are met.

Suppose you’ve created a pipeline and configured the trigger as follows:

With the configurations applied and the pipeline deployed, you will get a new URL:

Rate Limit

When creating APIs, we usually want to limit the number of API requests users can make in a given time interval.

To do this, enable the Rate Limit option and apply the following settings:

If the API has additional paths, the limit is shared among all of them. Rate limiting requires the API to use API Key or Basic Auth, because the Aggregate by parameter counts requests by credential: by groups of credentials when Consumer is selected, or by individual credential when Credential (API Key, Basic Auth) is selected.

If several Interval entries repeat the same value, only one of them is considered. The Limit parameter must also be greater than zero.

If the rate limiting options aren't set correctly, they are ignored and a warning log is issued. You can view this log on the Pipeline Logs page.
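To make these rules concrete, here is a conceptual fixed-window model in Python. It is not the API gateway's implementation. The fixed-window algorithm, the 30-day month, and which duplicate interval is kept are all assumptions. The aggregation key stands for a consumer or a credential, depending on Aggregate by. The request path is left out of the key so that additional paths share the limit.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, List, Tuple

# Window length in seconds for each Interval option.
# Assumption: "month" is approximated as 30 days; the gateway may handle it differently.
INTERVAL_SECONDS: Dict[str, int] = {
    "second": 1,
    "minute": 60,
    "hour": 3_600,
    "day": 86_400,
    "month": 30 * 86_400,
}


class FixedWindowRateLimiter:
    """Counts requests per aggregation key in fixed time windows (conceptual model only)."""

    def __init__(self, options: List[Tuple[str, int]]) -> None:
        self.limits: Dict[str, int] = {}
        for interval, limit in options:
            if interval not in INTERVAL_SECONDS or limit <= 0:
                # Invalid options are skipped with a warning instead of failing.
                print(f"warning: ignoring rate limit option {interval}={limit}")
                continue
            # Repeated intervals: only one value is kept (this sketch keeps the first).
            self.limits.setdefault(interval, limit)
        # (aggregation key, interval, window index) -> requests counted so far.
        self.counters: DefaultDict[Tuple[str, str, int], int] = defaultdict(int)

    def allow(self, key: str, now: float) -> bool:
        """Count the request and return True if every configured limit still has room.

        `key` is the consumer (Aggregate by = Consumer) or the credential
        (Aggregate by = Credential). The path is not part of the key, so
        additional routes share the same limit.
        """
        slots = []
        for interval, limit in self.limits.items():
            slot = (key, interval, int(now // INTERVAL_SECONDS[interval]))
            if self.counters[slot] >= limit:
                return False
            slots.append(slot)
        for slot in slots:
            self.counters[slot] += 1
        return True


if __name__ == "__main__":
    limiter = FixedWindowRateLimiter([("second", 2), ("minute", 5), ("minute", 9)])
    print([limiter.allow("consumer-a", now=0.1 * i) for i in range(3)])  # [True, True, False]
    print(limiter.allow("consumer-b", now=0.3))  # True: separate counter per key
```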

REST Trigger in Action

The examples below show how the trigger behaves in specific scenarios and how to configure it for each one.

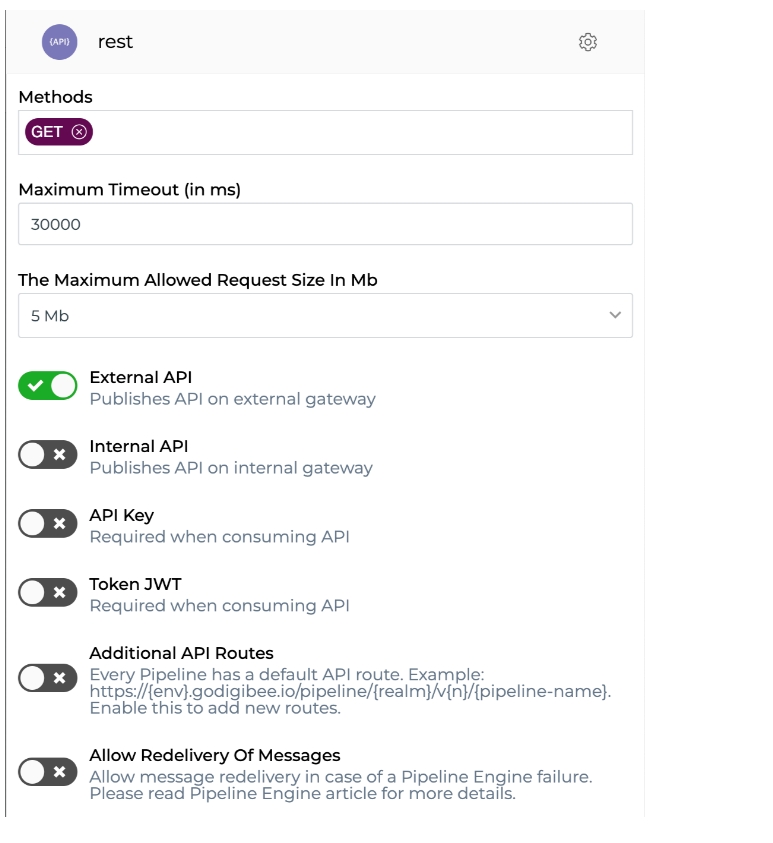

Query API with a JSON response

This example shows how to configure a pipeline with the REST Trigger to return information from the pipeline in JSON format, and how the response must be handled for this trigger.

First, create a new pipeline and configure the trigger as follows:

With the configuration above, you specify that the endpoint accepts only the GET method, that the API is external, and that no token is required for communication.

Next, add a MOCK component to the pipeline to provide the data that the endpoint returns. Place the component, connect it to the trigger, and configure it with the following JSON:

With this in place, the endpoint automatically returns the JSON defined above as its response.

After deployment, copy the generated URL and send a GET request. The endpoint should return status code 200, and the response body should match the JSON defined in the MOCK component.
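If you prefer a scripted check to a manual one, a minimal Python client using only the standard library could look like the sketch below. The function name is hypothetical. The 30-second client timeout simply mirrors the trigger's default Maximum Timeout of 30000 ms, so adjust it if you change that parameter.

```python
import json
import urllib.request
from typing import Any, Tuple


def get_json(url: str, timeout: float = 30.0) -> Tuple[int, Any]:
    """Send a GET request to `url` and return the status code and the parsed JSON body.

    urllib raises urllib.error.HTTPError for 4xx/5xx responses, so reaching the
    return statement means the endpoint answered with a non-error status.
    """
    request = urllib.request.Request(
        url, method="GET", headers={"Accept": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = response.read().decode("utf-8")
        return response.status, json.loads(body)
```

Call it with the URL generated for your pipeline, for example `status, body = get_json(url)`, then compare `status` with 200 and `body` with the JSON configured in the MOCK component.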

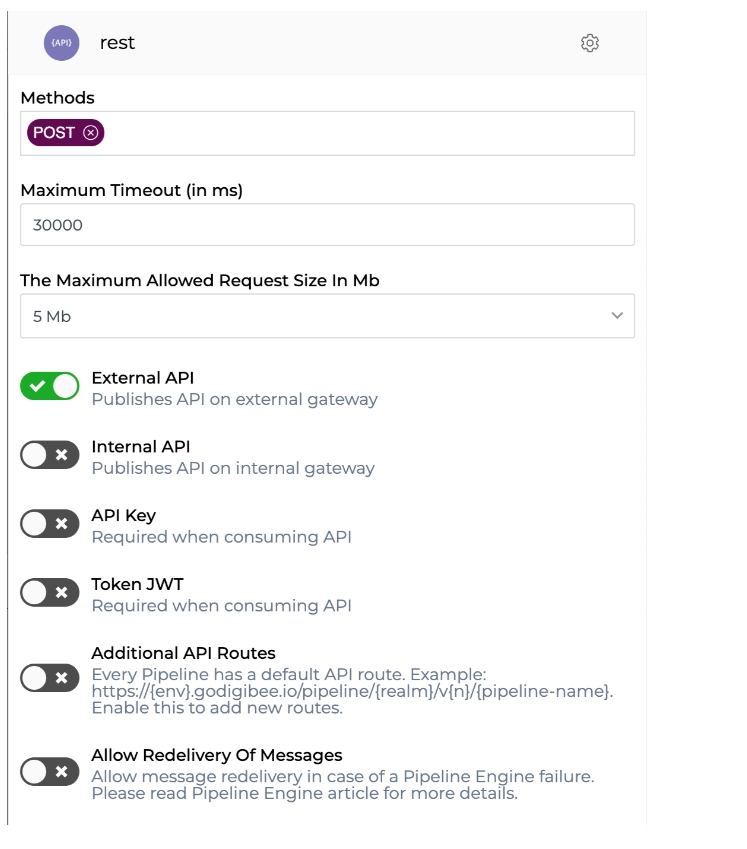

Dispatch API with a JSON response

This example shows how to configure a pipeline with the REST Trigger to receive data in the request, process it inside the pipeline, and return the result in JSON format.

First, create a new pipeline and configure the trigger as follows:

With the configuration above, you specify that the endpoint accepts only the POST method, that the API is external, and that no token is required for communication.

Next, add a MOCK component to the pipeline to combine the received data with the data that the endpoint returns. Place the component, connect it to the trigger, and configure it with the following JSON:

With this configuration, the pipeline receives a payload containing a new product, adds it to the array, and returns the array with the newly added product to the consumer.
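The MOCK component is configured with JSON, not code. However, the data flow it performs can be modeled in a few lines of Python to make each step explicit. In this sketch, the product fields and the EXISTING_PRODUCTS array are hypothetical stand-ins for the data configured in the MOCK. The request payload is read from the body field, which the trigger delivers as a string (see the message structure at the end of this page).

```python
import json
from typing import Any, Dict, List

# Hypothetical stand-in for the product array defined in the MOCK component.
EXISTING_PRODUCTS: List[Dict[str, Any]] = [
    {"name": "Product A", "price": 10.0},
    {"name": "Product B", "price": 20.0},
]


def add_product(trigger_message: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return the product array with the product from the request body appended.

    The trigger delivers the payload as a string in `body`, so it is parsed
    with json.loads first. A new list is returned and EXISTING_PRODUCTS is
    left unchanged.
    """
    new_product = json.loads(trigger_message["body"])
    return [*EXISTING_PRODUCTS, new_product]


if __name__ == "__main__":
    message = {"body": '{"name": "Product C", "price": 30.0}', "method": "POST"}
    print(json.dumps(add_product(message), indent=2))
```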

After deployment, copy the generated URL and send a POST request with the following body:

The endpoint should return status code 200, with a response body like the following:

Every time you make a request to the endpoint, the trigger delivers a message to the pipeline with the same structure:

body: the request payload, converted to a string.

form: if the request uses form-data, the submitted data is delivered in this field.

headers: the headers sent in the request. Some of them are filled in automatically by the tool used to make the request.

queryAndPath: the query and path parameters provided in the URL.

method: the HTTP method used in the request.

contentType: the Content-Type value, when it is provided in the request.

path: the path used in the request URL.
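The message structure above can be written down as a Python TypedDict, which can help when prototyping or testing pipeline logic outside the platform. Only the field names come from this page. The value types and the read_json_body helper are assumptions for illustration. Because body arrives as a string, JSON payloads have to be parsed before use.

```python
import json
from typing import Any, Dict, TypedDict


class TriggerMessage(TypedDict, total=False):
    # Field names follow the list above; the value types are assumptions.
    body: str
    form: Dict[str, Any]
    headers: Dict[str, str]
    queryAndPath: Dict[str, str]
    method: str
    contentType: str
    path: str


def read_json_body(message: TriggerMessage) -> Any:
    """Parse `body` as JSON when contentType says so; otherwise return the raw string."""
    raw = message.get("body", "")
    if raw and message.get("contentType", "").lower().startswith("application/json"):
        return json.loads(raw)
    return raw


if __name__ == "__main__":
    # Hypothetical message for a POST request with a JSON payload and an "id" path parameter.
    example: TriggerMessage = {
        "body": '{"name": "Product C"}',
        "headers": {"Content-Type": "application/json"},
        "queryAndPath": {"id": "42"},
        "method": "POST",
        "contentType": "application/json",
        "path": "/product-list/42",
    }
    print(example["method"], example["queryAndPath"]["id"], read_json_body(example))
```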
